|

|

|

Промышленный лизинг

Методички

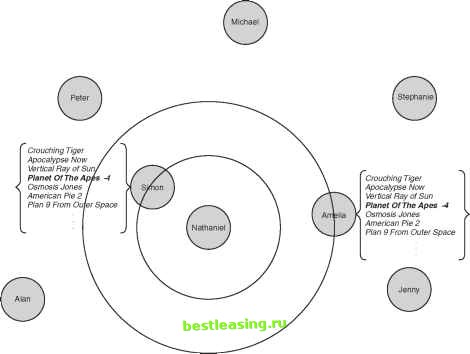

Table 8.16 Confidence with Weighted Voting dsum yes, 100% yes, 100% yes, 69% yes, 76% yes, 62% dEudid yes, 100% yes, 54% no, 61% yes, 52% yes, 60% In this case, weighting the votes has only a small effect on the results and the confidence. The effect of weighting is largest when some neighbors are considerably further away than others. Weighting can also be applied to estimation by replacing the simple average of neighboring values with an average weighted by distance. This approach is used in collaborative filtering systems, as described in the following section. Collaborative Filtering: A Nearest Neighbor Approach to Making Recommendations Neither of the authors considers himself a country music fan, but one of them is the proud owner of an autographed copy of an early Dixie Chicks CD. The Chicks, who did not yet have a major record label, were performing in a local bar one day and some friends who knew them from Texas made a very enthusiastic recommendation. The performance was truly memorable, featuring Martie Erwins impeccable Bluegrass fiddle, her sister Emily on a bewildering variety of other instruments (most, but not all, with strings), and the seductive vocals of Laura Lynch (who also played a stand-up electric bass). At the break, the band sold and autographed a self-produced CD that we still like better than the one that later won them a Grammy. What does this have to do with nearest neighbor techniques? Well, it is a human example of collaborative filtering. A recommendation from trusted friends will cause one to try something one otherwise might not try. Collaborative filtering is a variant of memory-based reasoning particularly well suited to the application of providing personalized recommendations. A collaborative filtering system starts with a history of peoples preferences. The distance function determines similarity based on overlap of preferences- people who like the same thing are close. In addition, votes are weighted by distances, so the votes of closer neighbors count more for the recommendation. In other words, it is a technique for finding music, books, wine, or anything else that fits into the existing preferences of a particular person by using the judgments of a peer group selected for their similar tastes. This approach is also called social information filtering. Team-Fly® Collaborative filtering automates the process of using word-of-mouth to decide whether they would like something. Knowing that lots of people liked something is not enough. Who liked it is also important. Everyone values some recommendations more highly than others. The recommendation of a close friend whose past recommendations have been right on target may be enough to get you to go see a new movie even if it is in a genre you generally dislike. On the other hand, an enthusiastic recommendation from a friend who thinks Ace Ventura: Pet Detective is the funniest movie ever made might serve to warn you off one you might otherwise have gone to see. Preparing recommendations for a new customer using an automated collaborative filtering system has three steps: 1. Building a customer profile by getting the new customer to rate a selection of items such as movies, songs, or restaurants. 2. Comparing the new customers profile with the profiles of other customers using some measure of similarity. 3. Using some combination of the ratings of customers with similar profiles to predict the rating that the new customer would give to items he or she has not yet rated. The following sections examine each of these steps in a bit more detail. Building Profiles One challenge with collaborative filtering is that there are often far more items to be rated than any one person is likely to have experienced or be willing to rate. That is, profiles are usually sparse, meaning that there is little overlap among the users preferences for making recommendations. Think of a user profile as a vector with one element per item in the universe of items to be rated. Each element of the vector represents the profile owners rating for the corresponding item on a scale of -5 to 5 with 0 indicating neutrality and null values for no opinion. If there are thousands or tens of thousands of elements in the vector and each customer decides which ones to rate, any two customers profiles are likely to end up with few overlaps. On the other hand, forcing customers to rate a particular subset may miss interesting information because ratings of more obscure items may say more about the customer than ratings of common ones. A fondness for the Beatles is less revealing than a fondness for Mose Allison. A reasonable approach is to have new customers rate a list of the twenty or so most frequently rated items (a list that might change over time) and then free them to rate as many additional items as they please. Comparing Profiles Once a customer profile has been built, the next step is to measure its distance from other profiles. The most obvious approach would be to treat the profile vectors as geometric points and calculate the Euclidean distance between them, but many other distance measures have been tried. Some give higher weight to agreement when users give a positive rating especially when most users give negative ratings to most items. Still others apply statistical correlation tests to the ratings vectors. Making Predictions The final step is to use some combination of nearby profiles in order to come up with estimated ratings for the items that the customer has not rated. One approach is to take a weighted average where the weight is inversely proportional to the distance. The example shown in Figure 8.7 illustrates estimating the rating that Nathaniel would give to Planet of the Apes based on the opinions of his neighbors, Simon and Amelia.  Figure 8.7 The predicted rating for Planet of the Apes is -2.66. 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 [ 102 ] 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 |