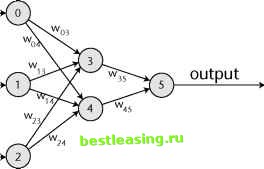

The Schema Theorem explains that genetic algorithms are really searching through the possible schemata to find fit building blocks that survive from one generation to the next. A natural question is how many building blocks are typically being processed. We will spare the reader the details, but Holland showed that the number of schemata being processed by a population of $n$ genomes is proportional to $n^3$. This means that each generation is really evaluating on the order of $n^3$ different schemata, even though it is only doing work on $n$ different genomes. Holland calls this property implicit parallelism. The computational effort for a genetic algorithm is proportional to the size of the population, and in this effort, the algorithm is usefully processing a number of schemata proportional to $n^3$. The property of implicit parallelism should not be confused with the explicit parallelism that is available when running genetic algorithms on a distributed network of workstations or on a computer with multiple processors.

The Schema Theorem gives us insight into why genomes work better when there are only two symbols (0s and 1s) in the representation. Finding the best building blocks requires processing as many schemata as possible from one generation to the next. For two symbols, the number of different genomes of a given length is $2^{\text{length}}$ and the number of different schemata is $3^{\text{length}}$. Roughly, the number of unique schemata being processed by a single genome is the ratio of the two, about $1.5^{\text{length}}$. Now, what happens if there are more symbols in the alphabet, say by adding 2 and 3? Now the number of genomes of a given length is $4^{\text{length}}$, and the number of different schemata is $5^{\text{length}}$ (because the asterisk adds one more symbol). Although there are more schemata, the number of schemata corresponding to a given genome is only $1.25^{\text{length}}$. As the number of symbols increases, the relative number of schemata decreases.

Another way of looking at this is to consider the schema *00.
If there are only two letters in the alphabet, then only two genomes process this schema, 000 and 100. If there are four letters, then there are four genomes: 000, 100, 200, and 300. Since genetic algorithms are trying to find the best schemata using a given population size, the additional genomes do not help the search. Schemata are the building blocks of the solutions, and using only two symbols allows the maximum number of schemata to be represented in a given population size. These estimates are not exact, but they are suggestive. More rigorous treatment confirms the result that an alphabet of two symbols is optimal from the point of view of processing schemata.

More Applications of Genetic Algorithms

Genetic algorithms have been used to solve some real problems. This section talks about two applications of genetic algorithms. The first is their application to neural networks and the second is their application to predictive modeling.

Application to Neural Networks

Neural networks and genetic algorithms are natural allies. One of the strengths of genetic algorithms is their ability to work on black boxes, that is, on problems where the fitness function is available but the details of the calculations are not known. The use of genetic algorithms to train neural networks is a good example, although this method of training is not common.

Figure 13.4 illustrates a simple neural network with three input nodes, a hidden layer with two nodes, and a single output node. The key to making the network work well is adjusting the weights on its edges so that the output produces the right answer for appropriate inputs. Chapter 7 discussed the nature of the functions inside the nodes and how standard training algorithms proceed. For the current discussion, all we need is that the network can produce an output for any given set of weights and inputs. The weights are real numbers, and there is a training set that includes inputs and the corresponding correct output.
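To make the black-box view concrete, here is a minimal sketch of a network with three inputs, two hidden nodes, and one output, evaluated from a flat list of weights. The sigmoid activation, the absence of bias terms, the weight ordering, and the names `network_output` and `training_error` are all assumptions made for illustration; the text does not specify them. The squared-error total is one plausible way to score a set of weights against a training set.

```python
import math


def sigmoid(x):
    """Logistic activation (an assumed choice; the text does not name one)."""
    return 1.0 / (1.0 + math.exp(-x))


def network_output(weights, inputs):
    """Evaluate a 3-input, 2-hidden-node, 1-output network.

    `weights` is a flat list of 8 real numbers (hypothetical layout):
      weights[0:3] -> edges from the inputs to hidden node 0
      weights[3:6] -> edges from the inputs to hidden node 1
      weights[6:8] -> edges from the hidden nodes to the output
    Bias terms are omitted to keep the sketch short.
    """
    if len(weights) != 8 or len(inputs) != 3:
        raise ValueError("expected 8 weights and 3 inputs")
    hidden = []
    for h in range(2):
        node_weights = weights[3 * h: 3 * h + 3]
        total = sum(w * x for w, x in zip(node_weights, inputs))
        hidden.append(sigmoid(total))
    output_total = sum(w * v for w, v in zip(weights[6:8], hidden))
    return sigmoid(output_total)


def training_error(weights, training_set):
    """Sum of squared errors over (inputs, correct_output) pairs."""
    return sum(
        (network_output(weights, inputs) - correct) ** 2
        for inputs, correct in training_set
    )


if __name__ == "__main__":
    # Toy training set, invented purely for illustration.
    toy_training_set = [([1.0, 2.0, 3.0], 0.5), ([0.0, 0.0, 0.0], 1.0)]
    print(training_error([0.0] * 8, toy_training_set))
```

Because `training_error` needs only the weights and the training set, an optimizer can treat it as a black box: it never has to know what happens inside the nodes.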
[Figure 13.4: A neural network is described by weights that genetic algorithms can optimize. The inputs are labeled input 1, input 2, and input 3. Figure annotations: Without really even understanding how a neural network works, the weights can be gathered into a genome so a genetic algorithm can optimize them. Each weight gets represented by some number of bits.]

The first problem faced is to determine what a genome looks like. The genome consists of all the weights in the network concatenated together. What is the fitness function? The fitness function creates a network using the weights and then applies this model to the training set. The fitness function then compares the predicted output of this neural network to the actual output; hence, the fitness function is defined as the overall error of the neural network with those weights on the training set. The genetic algorithm proceeds by minimizing this function.

Another application to neural networks is determining the topology of the network: how many nodes should be in the hidden layer and what activation functions should be used. Different topologies can be described using different sets of weights, and then the genetic algorithms can proceed to find the best ones. In this case, the fitness function creates the network described by the genome and then trains the network using standard training methods; the error from the best network is used for the fitness function. This is an example of genetic algorithms being used to evolve optimal solutions for a complicated problem.

Case Study: Evolving a Solution for Response Modeling

A more interesting use of genetic algorithms is to solve a real business problem. Direct feedback from customers is a powerful source of information for businesses. When a customer makes a complaint, the company has an opportunity to make a good impression by fixing the problem promptly or, if it is too late for that, by making up for the problem somehow.
For some companies, such as product goods manufacturers, complaints provide dates of actual product use, a bit of additional information to add to manufacturing and shipping dates. Customer complaints also hand companies an opportunity to improve processes so that they have fewer dissatisfied customers in the future.

In our work building retention models for mobile phone companies, we have seen situations where customers who make calls to customer service are more loyal than other customers. Apparently, responding to the expressed needs of customers can make them happier and more loyal, especially when the response is prompt and appropriate. At another mobile phone company, calls to customer service indicated a higher probability of churn, due no doubt to the long wait periods at their call centers.

This case study talks about classifying customer comments so that complaints can be routed separately from compliments, using the ideas of genetic algorithms.

Business Context

The customer service department of a major international airline processes many customer comments, which arrive via several channels: