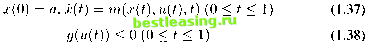

paper. A similar situation, referred to in this book as the chosen cost, is discussed in Section 2.8. The same method can also be applied to an optimal control problem whose cost function does not include the full variation of the control: adjusting the penalty constant appropriately yields a smoother control without changing the optimum of the original cost function. Relevant references for this problem include [55, 1987], [2, 1976] and [66, 1977].

7.4 Optimal switching control computational method

In Li [53, 1995], a computational method was developed for a class of optimal control problems that involve choosing a fixed number of switching times $t_1, t_2, \ldots, t_{K-1}$, which divide the system's time horizon $[0, T]$ into $K$ time periods (with $t_0 = 0$ and $t_K = T$). For each period $[t_{i-1}, t_i]$, a subsystem $S_i$ is selected from a finite set of given candidate subsystems, and that subsystem governs the dynamics during the period. All $K$ subsystems and $K - 1$ switching times are taken as decision variables, and they are chosen so as to minimize the cost of the optimal control problem. Only a finite time horizon is considered here. In this method, the dynamics are written as a linear combination of the candidate subsystems, with suitable constraints on the coefficients of the combination, and a time-rescaling technique is applied; together these transform the original problem into an equivalent optimal control problem with system parameters. Many control problems whose system dynamics are subject to sudden changes can be put into this form.

In recent years, more general optimal switching control problems have been studied, applying the optimality principle and discussing the existence of optimal controls. Essentially, these problems are formulated as optimal control problems in which the feasible controls are determined by appropriate switching functions.
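The time-rescaling step can be illustrated with a small sketch. It is not Li's implementation: the scalar linear candidate subsystems $\dot{x} = a_i x$, the encoding of the selected subsystem as 0/1 coefficients that sum to one (one possible reading of the "suitable constraints"), and the names `simulate_rescaled` and `rk4` are all assumptions made for illustration. The $k$-th period is mapped onto a fixed unit interval of a new time variable $s$, with $dt/ds = \theta_k = t_k - t_{k-1}$, so the switching times become fixed grid points and only the parameters $\theta_k$ remain to be chosen.

```python
import math
from typing import Callable, List, Sequence


def rk4(f: Callable[[float, float], float], x: float, s0: float, s1: float, steps: int) -> float:
    """Classical fourth-order Runge-Kutta for x' = f(s, x) from s0 to s1."""
    h = (s1 - s0) / steps
    for n in range(steps):
        s = s0 + n * h
        k1 = f(s, x)
        k2 = f(s + h / 2, x + h / 2 * k1)
        k3 = f(s + h / 2, x + h / 2 * k2)
        k4 = f(s + h, x + h * k3)
        x += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return x


def simulate_rescaled(
    x0: float,
    rates: Sequence[float],          # hypothetical candidates: x' = rates[i] * x
    weights: List[Sequence[int]],    # one coefficient vector per period
    thetas: Sequence[float],         # period lengths theta_k = t_k - t_{k-1}
    steps: int = 200,
) -> float:
    """Integrate a switched system in rescaled time s on the fixed grid 0, 1, ..., K.

    Period k (0-based) is mapped onto [k, k + 1] with dt/ds = theta_k, so the
    switching times become fixed grid points and only theta_k varies.
    """
    x = x0
    for k, (w, theta) in enumerate(zip(weights, thetas)):
        # Assumed constraints: coefficients are 0 or 1 and sum to 1, so the
        # linear combination selects exactly one candidate subsystem.
        if any(c not in (0, 1) for c in w) or sum(w) != 1:
            raise ValueError("each period must select exactly one subsystem")
        a = sum(c * r for c, r in zip(w, rates))
        # Chain rule: dx/ds = (dt/ds) * dx/dt = theta_k * a * x.
        x = rk4(lambda s, y: theta * a * y, x, float(k), float(k + 1), steps)
    return x


# Usage: subsystems 0, 1, 0 with switching times t_1 = 0.3, t_2 = 0.8 and T = 1.
x_end = simulate_rescaled(1.0, [-1.0, 0.5], [[1, 0], [0, 1], [1, 0]], [0.3, 0.5, 0.2])
# In original time the exact answer is exp(-0.3 + 0.25 - 0.2) = exp(-0.25).
print(x_end, math.exp(-0.25))
```

Because the switching times now sit at the fixed points $s = 1, \ldots, K-1$, changing the parameters $\theta_k$ (subject to $\sum_k \theta_k = T$) only rescales the right-hand side; the integration grid itself never moves.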
Relevant references can be found in the bibliography: [53, 1995], [87, 1989], [88, 1991], [27, 1979]. Such problems involve both discrete decisions (the choice of subsystems) and continuous decisions (the switching times). A transformation technique is introduced to solve this mixed discrete-continuous optimal control problem. The basic idea is to transform the mixed continuous-discrete problem into an optimal parameter selection problem [84, 1991], which involves only continuous decision variables. However, the transformed problem still contains switching times that fall inside the subdivisions of the time horizon, which makes its numerical solution difficult. A second transformation therefore converts it into an optimal control problem with system parameters, in which the lengths of the switching intervals are taken as the parameters. This second equivalent problem can be solved by standard optimal control techniques. A numerical example was solved in Li's paper [53, 1995] using this computational method. Because the dynamics of this kind of problem change suddenly at the switching times, the problem has a mixed continuous-discrete nature. Switching times, a time scaling and a control function are introduced to deal with the discontinuities in the system dynamics. The control function is piecewise constant, with grid-points corresponding to the discontinuities of the original problem, so general optimal control software can be used to solve the problem.

7.5 SCOM

In Craven and Islam [18, 2001] (see also Islam and Craven [38, 2002]), a class of continuous-time optimal control problems was solved with a computer software package called SCOM, which also uses the MATLAB system. As in the MISER [33, 1987] and OCIM [15, 1998] packages, the control is approximated by a step-function.
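As a sketch of what approximating the control by a step-function means on $[0,1]$ with $N$ equal subintervals, the code below represents a given control by its subinterval averages and measures the resulting error. This is an illustration under our own assumptions: in SCOM the step values are decision variables of the optimization, whereas here they are computed from a known function, and the helper names `step_approximation`, `step_eval` and `sup_error` are hypothetical.

```python
from typing import Callable, List


def step_approximation(u: Callable[[float], float], N: int, samples: int = 100) -> List[float]:
    """Values u_1, ..., u_N of a step-function on N equal subintervals of [0, 1].

    Each value is the mean of u over its subinterval (the best approximation
    in the L2 sense), estimated with the composite midpoint rule.
    """
    h = 1.0 / (N * samples)
    values = []
    for j in range(N):
        left = j / N
        mean = sum(u(left + (m + 0.5) * h) for m in range(samples)) / samples
        values.append(mean)
    return values


def step_eval(values: List[float], t: float) -> float:
    """Evaluate the step-function at t in [0, 1]; the value at t = 1 is u_N."""
    N = len(values)
    j = min(int(t * N), N - 1)   # index of the subinterval [j/N, (j+1)/N) containing t
    return values[j]


def sup_error(u: Callable[[float], float], N: int, grid: int = 1000) -> float:
    """Largest deviation between u and its step approximation on a sample grid."""
    values = step_approximation(u, N)
    return max(abs(u(i / grid) - step_eval(values, i / grid)) for i in range(grid + 1))


# Usage: for u(t) = t the subinterval means are the midpoints (j + 0.5) / N and
# the sup error is about 1 / (2N), so it roughly halves each time N doubles.
for N in (4, 8, 16):
    print(N, sup_error(lambda t: t, N))
```

Averages are used here only because they give the best $L^2$ fit; any other choice of step values would still define an element of the subspace of step-functions.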
The matrix features of MATLAB make programming easier, and finite-difference approximations offer some advantages for computing gradients. In that paper, end-point conditions and implicit constraints in some economic models are handled simply. Consider an optimal control problem of the form

$$\min_{x(\cdot),\,u(\cdot)} J^0(x,u) := \int_0^1 f(x(t),u(t),t)\,dt + \Phi(x(1))$$

subject to the differential equation (1.39) with its initial condition, together with the problem's constraints. The end-point term and the constraints can be handled by a penalty term, which is described in detail in Section 4.4; the concern here is only how the computational algorithm works.

Together with its initial condition, the differential equation (1.39) determines $x(\cdot)$ from $u(\cdot)$; denote this by $x = Q(u)$. The interval $[0,1]$ is divided into $N$ equal subintervals, and $u(\cdot)$ is approximated by a step-function taking the values $u_1, u_2, \ldots, u_N$ on the successive subintervals. An extra constraint $u \in V$ is added to the given problem, where $V$ is the subspace of such step-functions. Consequently, $x(\cdot)$ becomes a polynomial function, determined by its values $x_0, x_1, \ldots, x_N$ at the grid-points. Because the right-hand side of the differential equation is then a non-smooth function of $t$, with discontinuities at the grid-points, the differential equation solver must be able to handle such functions. Many standard solvers do not have this feature. Only one of the six ODE solvers in MATLAB is designed for stiff differential equations; however, that solver is not used in this book. Instead, a computer package called nqq is used to deal with the jumps in the right-hand side of the differential equation in the dynamic system. The fourth-order Runge-Kutta method [30, 1965], a standard method for solving ordinary differential equations, is slightly modified to solve a differential equation of the form $\dot{x}(t) = m(x(t),u(t))$, where $u(\cdot)$ is a step-function.
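The following is a minimal sketch, assuming a scalar state, of evaluating the discretized objective for a step control with a classical fourth-order Runge-Kutta scheme. It is not the nqq code: the names `evaluate_objective`, `m`, `f` and `phi` are hypothetical, $m$ is allowed to depend on $t$ as well, and the running cost is integrated alongside the state by appending the equation $\dot{y} = f(x,u,t)$, $y(0) = 0$, so that $J^0 = y(1) + \Phi(x(1))$. The control is held at $u_j$ for every Runge-Kutta stage inside subinterval $j$, which is one way to realize the modification needed for step-function controls.

```python
import math
from typing import Callable, List, Tuple

Fn = Callable[[float, float, float], float]   # g(x, u, t)


def evaluate_objective(m: Fn, f: Fn, phi: Callable[[float], float],
                       x0: float, u: List[float], substeps: int = 10) -> Tuple[float, List[float]]:
    """Objective J = int_0^1 f dt + Phi(x(1)) for a step control u_1, ..., u_N.

    The state equation x' = m(x, u, t) and the cost equation y' = f(x, u, t)
    are integrated together by classical RK4, subinterval by subinterval.
    Returns J and the grid values x_0, x_1, ..., x_N.
    """
    N = len(u)
    h = 1.0 / (N * substeps)
    x, y = x0, 0.0
    xs = [x0]
    for j, uj in enumerate(u):
        # All four RK4 stages on [j/N, (j+1)/N] use uj, including the stage at
        # t = (j+1)/N, where a floor(t * N) lookup would return the next value.
        def rhs(t: float, xv: float) -> Tuple[float, float]:
            return m(xv, uj, t), f(xv, uj, t)

        for n in range(substeps):
            t = j / N + n * h
            k1 = rhs(t, x)
            k2 = rhs(t + h / 2, x + h / 2 * k1[0])
            k3 = rhs(t + h / 2, x + h / 2 * k2[0])
            k4 = rhs(t + h, x + h * k3[0])
            x += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
            y += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        xs.append(x)
    return y + phi(x), xs


# Usage on a hypothetical test problem: x' = -x + u, x(0) = 1, f = x^2,
# Phi = 0 and u = 0, whose exact objective is (1 - e^{-2}) / 2.
J, xs = evaluate_objective(lambda x, u, t: -x + u, lambda x, u, t: x * x,
                           lambda x: 0.0, 1.0, [0.0] * 5)
print(J, (1 - math.exp(-2)) / 2)
```

Integrating subinterval by subinterval keeps every jump of $u$ on a step boundary, so the Runge-Kutta formula never straddles a discontinuity of the right-hand side.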
The modification simply records the subinterval counter $j$ together with the time $t$, so that when $t = (j+1)/N$, $u(t)$ still takes the value belonging to the subinterval $[j/N, (j+1)/N]$ rather than the value for the next subinterval. In the computation, the differential equation is solved forward (starting at $t = 0$), while the adjoint equation is solved backward (starting at $t = 1$). Two ways of solving such optimal control problems are used:

1. Compute only objective values, from the objective function, the differential equation and the augmented Lagrangian, without computing gradients from the adjoint equation and the Hamiltonian. This assumes that gradients can be estimated by finite differences from the computed values.
2. Compute both objective values and gradients, the latter from the adjoint equation and the Hamiltonian.

Implicit constraints: in some economic models, such as the model [48, 1971] to which Craven and Islam applied the SCOM package in [18, 2001], fractional powers of the state appear (a term $x(t)^\beta$ with $0 < \beta < 1$ on the right-hand side of the differential equation). Some choices of $u(\cdot)$ then make $x(t)$ negative, causing the solver to crash, so the requirement that $x(t)$ remain nonnegative forms an implicit constraint. In this case a finite-difference approximation to the gradients is useful, since it provides approximations over a wider domain. As mentioned in Section 1.7.1, linear interpolation can also be used in computing gradients and costate functions. Increasing the number $N$ of subintervals gives better results; this is discussed further in Sections 2.7 and 2.8.

Two problems were tested with SCOM in the paper by Craven and Islam [18, 2001], and accurate results were obtained for both. In that paper, the MATLAB routine constr estimated gradients by finite differences to compute the optimum. The economic models with implicit constraints in [18, 2001] differ from the models solved by other computer software packages. However, this software still needs further development for more general use.
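The two ways of obtaining gradients can be compared on a hypothetical linear-quadratic test problem that is not one of the models in [18, 2001]: minimize $\int_0^1 u(t)^2\,dt + (x(1)-1)^2$ subject to $\dot{x} = -x + u$, $x(0) = x_0$, with $u$ a step-function. As a simplification, the linear state equation is propagated exactly over each subinterval instead of by Runge-Kutta, and no augmented Lagrangian is needed because the test problem has no constraints. The first approach estimates each $\partial J/\partial u_j$ by central differences of objective values; the second solves the adjoint equation $\dot{\lambda} = -\partial H/\partial x$ backward from $t = 1$, with $H = u^2 + \lambda(-x + u)$ and $\lambda(1) = \Phi'(x(1))$, and integrates $\partial H/\partial u$ over each subinterval. All function names are illustrative.

```python
import math
from typing import List

# Hypothetical test problem (not from [18, 2001]):
#   minimize  J = int_0^1 u(t)^2 dt + (x(1) - 1)^2
#   subject to  x'(t) = -x(t) + u(t),  x(0) = x0,
# where u is a step-function with values u[0], ..., u[N-1].


def final_state(u: List[float], x0: float) -> float:
    """Forward pass from t = 0, exact for this linear equation."""
    decay = math.exp(-1.0 / len(u))
    x = x0
    for uj in u:
        x = x * decay + uj * (1.0 - decay)   # exact over one subinterval
    return x


def objective(u: List[float], x0: float = 0.0) -> float:
    """Objective value only; int u^2 dt is exact for a step control."""
    return sum(uj * uj for uj in u) / len(u) + (final_state(u, x0) - 1.0) ** 2


def fd_gradient(u: List[float], x0: float = 0.0, eps: float = 1e-6) -> List[float]:
    """Approach 1: central finite-difference estimate of dJ/du_j."""
    grad = []
    for j in range(len(u)):
        up, um = list(u), list(u)
        up[j] += eps
        um[j] -= eps
        grad.append((objective(up, x0) - objective(um, x0)) / (2 * eps))
    return grad


def adjoint_gradient(u: List[float], x0: float = 0.0) -> List[float]:
    """Approach 2: gradient from the adjoint equation and the Hamiltonian.

    H = u^2 + lam * (-x + u), so lam' = -dH/dx = lam with lam(1) = 2 (x(1) - 1);
    dJ/du_j is the integral of dH/du = 2 u_j + lam(t) over subinterval j.
    """
    N = len(u)
    decay = math.exp(-1.0 / N)
    lam = 2.0 * (final_state(u, x0) - 1.0)      # lam at t = 1
    grad = [0.0] * N
    for j in reversed(range(N)):                # backward from t = 1
        # Here lam = lam((j+1)/N); the integral of lam over [j/N, (j+1)/N]
        # is lam((j+1)/N) * (1 - exp(-1/N)).
        grad[j] = 2.0 * u[j] / N + lam * (1.0 - decay)
        lam *= decay                            # lam(j/N) = lam((j+1)/N) * exp(-1/N)
    return grad


u = [0.2, -0.1, 0.4, 0.0, 0.3]
for a, b in zip(fd_gradient(u), adjoint_gradient(u)):
    print(f"{a:.8f}  {b:.8f}")
```

For this problem the objective is quadratic in $u_1, \ldots, u_N$, so the central differences agree with the adjoint gradient up to rounding error. The finite-difference route needs only objective values, whereas the adjoint route needs the derivatives of the Hamiltonian and a backward solve.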